Speculative Code: Mediating the Virtual-Reality of Emergent

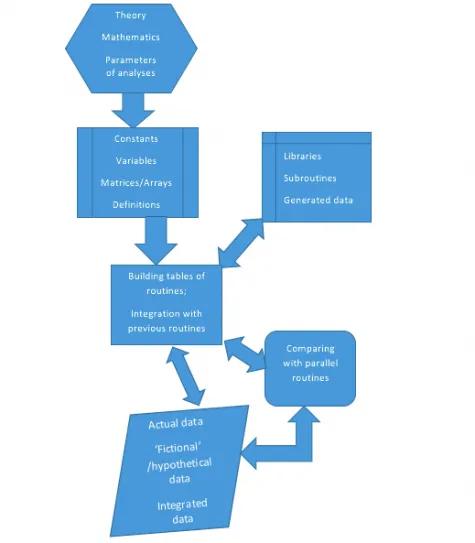

Figure 1 Modelling of a Generic Scientific Data Simulation and Generation Process

Unlike written human language, a computer programming language is designed to automate selected actions to produce an expected or predicted outcome. While digital infrastructures can now support language processing that approximates the ‘natural language’ forms of human communication, the most powerful programming language is the one with direct and full control of the hardware (the instrument and machine), requiring the least translation into machine language. Hence, the language closest to the logic and scale of the hardware it communicates with has the strongest command over the machine. Although word processors offer packages for many languages, and text encoding has evolved to support characters of varying degrees of discreteness, syntax commands exist only for the most discrete character forms, namely the Roman alphabet and the Hindu-Arabic numerals, both of which map onto ASCII and EBCDIC. And although computing has reached the point of producing user-oriented APIs and context-aware programming languages for building content platforms that sync with ubiquitous mobile devices, the advent of the quantum computer calls for a reconstitution of the idea of a (quantum) scalable machine code: a return to the concept underpinning the first generation of programming languages.

For a sense of how code is a discrete yet material embodiment of logico-mathematical inscriptions, we can keep in view the evolution of human language, from rudimentary descriptions of daily life to complex grammatical systems, before its reduction to a form of pure logic. Sophistication of expression yields notational units that can depict complicated ideas with fewer bits and strokes; think of the arrival of simplified Chinese ideograms/pictograms and of Korean Hangul. Logic, with its capacity for the precise articulation of abstract concepts, could only be produced once language cultures had peaked in sophistication. In human-computer interaction, the needs of the computer have to precede those of the human, given the latter’s current limitations. Yet what the computer processes rapidly, the human needs time to learn and adapt to, because such a ‘simple’ logic system is not part of our everyday knowledge. Nevertheless, logical notations represent causal relationships that convert and transform basal properties, ones that might not be compelling in their raw forms, into novel outcomes.

Simplicity emerges from complexity, and the same is true of code: code as semiotic referent to an n-decimal system. As a culture develops a natural language rich enough to communicate the messy interactions and occurrences of the natural world, we also become interested in reducing these interactions to definable predictions, insofar as we can mediate between the knowns and the unknowns, so that seemingly observed phenomenal anomalies can be reinterpreted as possible descriptions of, or prescriptions for, the newly discovered and still-being-understood entity.

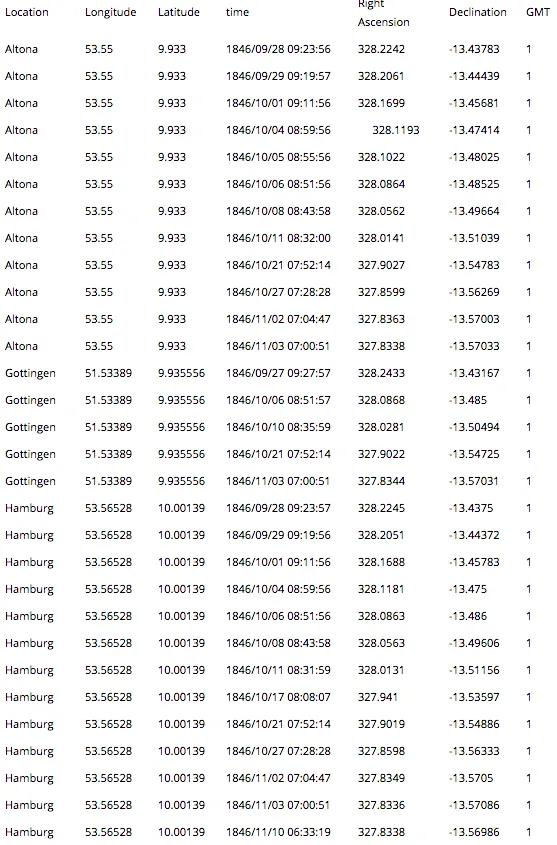

But what preceded code, and remains intrinsically bound to it, is mathematics. The nineteenth century saw both the genesis of code, through the work of Ada Lovelace, and predictions made from abstract mathematics rather than direct observation, such as the prediction of an astronomical unknown (later identified as the planet Neptune) made independently by the French astronomer Urbain Jean Joseph Le Verrier and the British astronomer John Couch Adams.[1] Astronomy had thrived over the centuries on the accumulation of systematic computations, in the form of mathematical tables and ephemerides of observable celestial bodies, so much so that the first group of female technicians trained to perform these tedious yet intricate calculations were known as ‘computers’. Lovelace believed that the Analytical Engine could be programmed to serve the needs of such computations, and much more.

Table 1 The observations of the planet Neptune from five locations in Europe are tabulated here. The right ascension and declination (equatorial coordinates) were converted to azimuth and altitude (horizontal coordinates), and then to Cartesian coordinates, as can be seen in the visual below.[2]
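The conversion described in the caption can be sketched in Python. This is a minimal illustration assuming the standard spherical-astronomy formulas, with the observer’s latitude and local sidereal time supplied as inputs; the function names are hypothetical, and refraction, precession, nutation and aberration are ignored, so it should not be read as the procedure actually used to produce the figure.

```python
import math


def equatorial_to_horizontal(ra_deg, dec_deg, lat_deg, lst_deg):
    """Convert right ascension/declination to (altitude, azimuth) in degrees.

    Assumes the observer's latitude and local sidereal time (LST, in degrees)
    are known. Azimuth is measured from north through east. Refraction,
    precession, nutation and aberration are ignored in this sketch.
    """
    ha = math.radians(lst_deg - ra_deg)  # hour angle H = LST - RA
    dec = math.radians(dec_deg)
    lat = math.radians(lat_deg)

    # sin(alt) = sin(dec) sin(lat) + cos(dec) cos(lat) cos(H)
    sin_alt = math.sin(dec) * math.sin(lat) + math.cos(dec) * math.cos(lat) * math.cos(ha)
    alt = math.asin(max(-1.0, min(1.0, sin_alt)))  # clamp rounding error

    # Components proportional to sin(Az) and cos(Az) (north = 0, east = 90)
    sin_part = -math.cos(dec) * math.sin(ha)
    cos_part = math.sin(dec) * math.cos(lat) - math.cos(dec) * math.sin(lat) * math.cos(ha)
    az = math.atan2(sin_part, cos_part)
    return math.degrees(alt), math.degrees(az) % 360.0


def horizontal_to_cartesian(alt_deg, az_deg):
    """Unit vector on the sky with x = east, y = north, z = up (zenith)."""
    alt, az = math.radians(alt_deg), math.radians(az_deg)
    return (
        math.cos(alt) * math.sin(az),
        math.cos(alt) * math.cos(az),
        math.sin(alt),
    )


# Hypothetical example values, not taken from Table 1
alt, az = equatorial_to_horizontal(ra_deg=328.0, dec_deg=-13.0, lat_deg=51.5, lst_deg=328.0)
print(round(alt, 2), round(az, 2), horizontal_to_cartesian(alt, az))
```

In this hypothetical example, an object at right ascension 328° and declination −13°, crossing the meridian for an observer at latitude 51.5° N, comes out at an altitude of about 25.5° due south (azimuth 180°).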

When Lovelace wrote the notes[3] that would inform the philosophical, mathematical and logical foundations of today’s programming languages, she already understood the importance of examining closely, and with the keenest attention, the mechanism governing numerical and quantitative computation, rather than taking that mechanism for granted. She was prescient in pointing out that a lack of standards (and documentation) could lead to complications and to the falsification of reasoning and results; a lack of standards also makes sign conventions shift, which Lovelace noted as well. Most importantly, she understood the significance of how one would code mathematical notation. This meant deciding whether to produce cycles of arithmetic or algebraic operations to perform calculations that do not require higher-order intelligence (yet are difficult for even a highly intelligent human to do without error), or to animate ‘fictional’ and speculative equations and formulas that are mathematically elegant but might have neither immediate utility nor physical possibility.

While astronomers such as Le Verrier and Adams were making mathematical predictions about what would become Neptune, Lovelace was already thinking about how such predictions could be refined by a program that operationalizes abstract analytical functions to receive and generate data, while delving more deeply into the mechanisms and functions of these operations. Such imaginative force is a precursor to the Monte Carlo method for the computational simulation of big scientific data as we understand it today, a strategy put to good use by astrophysicists and cosmologists who perform experimental cosmology by simulating the data collected through their detectors and observatories, and by making predictions that guide further detections. Lovelace imagined what all this could mean for the Analytical Engine, which was still a speculative footnote at the time. Her notes point to an understanding of reduction as a practice: breaking complex problems down into workable steps before reconstructing the solutions into their original, highly complex form.
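To make the idea of Monte Carlo simulation concrete, here is a minimal Python sketch that generates a toy ‘mass spectrum’ by random sampling: a smoothly falling background plus a small Gaussian bump. The shapes, the peak position of 125 and every other number are illustrative assumptions rather than values from any experiment, and the function names are hypothetical.

```python
import random


def generate_toy_spectrum(n_background, n_signal, seed=1):
    """Monte Carlo sketch of a toy 'mass spectrum' (arbitrary units).

    Background: exponentially falling values starting at 100 (mean offset 30).
    Signal: a Gaussian bump centred at 125 with width 2.
    All shapes and numbers are illustrative assumptions.
    """
    rng = random.Random(seed)  # seeded so every run is reproducible
    background = [100.0 + rng.expovariate(1.0 / 30.0) for _ in range(n_background)]
    signal = [rng.gauss(125.0, 2.0) for _ in range(n_signal)]
    return background + signal


def histogram(values, lo, hi, n_bins):
    """Count values falling into equal-width bins on [lo, hi)."""
    counts = [0] * n_bins
    width = (hi - lo) / n_bins
    for v in values:
        if lo <= v < hi:
            # min() guards against floating-point rounding at the upper edge
            counts[min(int((v - lo) / width), n_bins - 1)] += 1
    return counts


masses = generate_toy_spectrum(5000, 200)
counts = histogram(masses, 100.0, 160.0, 30)
for i, c in enumerate(counts):
    print(f"{100 + 2 * i:>4}-{102 + 2 * i:<4} {'#' * (c // 10)}")
```

The printed text histogram should show the bump as an excess in the bins around 125 standing above the falling background. Seeding the generator makes each run reproducible, which matters when simulated data are compared against detector data.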

Figure 2 MATLAB simulation of the ephemerides from Table 1, providing an Earth-bound view of Neptune in 1846 that confirmed its prediction (simulation by Wai Sern Low)

The practice of reduction also arises from the effort to make sense of the context and implications of the Anthropocene, from the time global warming was acknowledged (and hence discovered), as documented in Spencer Weart’s The Discovery of Global Warming. The book describes a difficult and obscure struggle to get the scientific community to attend to observed systemic fluctuations at the macro-scale, including the prediction of meteorological shifts. Only when climate scientists were able to code and model thousands of data points from meteorological base stations, satellite telemetry, and atmospheric and geophysical detectors, and to generate data by turning manual algorithms derived from analytical formulae into code, did the rest of the scientific community, policy-makers, and members of the public begin to take climate change seriously. High-resolution imaging involving thousands to millions of lines of code made climate change more plausible and imaginable to non-scientists.

Figure 3: Simulation of the ozone layer at approximately 20 km above sea level from a single year of daily data sets. Concentration levels are color-coded to visualize the depletion of the ozone layer at this atmospheric height. Global 2015 ozone data from the World Data Centre for Remote Sensing of the Atmosphere. Simulation in MATLAB by Wai Sern Low.

When machine language was created to run chamber-sized ‘supercomputers’, the code was both reductive (its syntax pared down to the basic function of an electronic computer operating by a duality of ‘on’ and ‘off’ switches) and complex for a human used to working with less precise expressions. The precise language that codifies numerical methods for simulating the non-linear processes of classical physics enables the automation of targeted tasks. In due course, more ambitious speculations about possible outcomes, including boundary-testing in problematic areas, could be formalized.

Today, rather than merely observing the visible tracks left by the interaction of a microphysical (or cosmic) entity of interest with an environment perceptible to the human observer, we can penetrate the invisible (and largely abstract) world of entities that brought about big science, big science being represented by infrastructures made possible because code can remotely control instruments placed in the most uninhabitable or extreme environments. This ability lets us think about underground dungeons where micro-particles collide and decay into more exotic micro-particles, and engage in speculative activities in the name of ground-breaking science (from studying dark matter to colliding particles), defense (think missile offensives), and well-being (drones that bring aid to difficult terrain, and medical imaging technology that examines the usually inaccessible parts of the human anatomy).

Big Science + Code + infrastructure beyond the human scale (ranging from the cosmic to the subatomic) = justification for speculative science.

Code includes checks (not foolproof, but mostly reliable) to ensure that mathematical and physical standards are not violated in the eagerness to offer explanations for, or prescribe searches for, novel entities within determined parameters. However, these checks do not yet make the matching of statistical data to theory seamless, for example when the same code serves both as the procedure for deciding the direction of a search and as the method for verifying the data that search generates.

Speculative searches are legitimized and valorized by the corporeality of code: code that could only be developed by taking the computable parts of abstract analytics, or by reformulating abstract analytics into a computable form for constructing algorithms. Code designs the narrative structure that gives impetus to a scientific work, mediating between background and signal to herald a potential discovery, and therefore also mediating between the data and the instruments that detect, filter, and define the parameters for data points that signal or predict an emergent entity. Although code is not necessary for making scientific predictions, it changes how a prediction is made: by setting one’s understanding of the predictive theory in relation to an empirical terrain one does not yet understand, and by developing what Alfred North Whitehead would call concrescence.[4] Concrescence is the emergence of an unprecedented prehension from the integration of prehensions, a feedback loop of informational coalescence that integrates the theoretical, experimental, and phenomenological, and feeds the insights arising from these combinations back into the informational system to produce ever more insights. The loop does not stop until a set of conditions is fulfilled. The ontology of a predicted physical phenomenon is then no longer an abstract force that appears symbolically; it acquires operational value.

The media-famous Higgs boson was discovered when its mass distribution, the probabilistic spread of energy arising from predicted interactions between sub-protonic particles (such as quarks) and other bosons (such as the Z and W±), produced signal peaks within the required degrees of statistical certainty and confidence. The peaks were indeed the long-sought confirmation of a prediction made within the standard operating framework of particle physics, known as the Standard Model, with the promise that further investigation of that mass spectrum could unearth more clues about the Higgs, and even about what its existence might reveal of a different kind of physical symmetry. The rare decays within that spectrum will, in turn, lift the veil further on the pre-Anthropocenic world.
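The ‘degrees of statistical certainty’ mentioned above are usually expressed as a significance Z, in units of standard deviations, and particle physics conventionally requires Z ≥ 5 to claim a discovery. The Python sketch below compares two common approximations for a simple counting experiment with expected signal s and known background b: the rough s/√b and the so-called Asimov formula. It is a simplification that ignores systematic uncertainties and the look-elsewhere effect, and the counts used are hypothetical.

```python
import math


def naive_significance(s, b):
    """Rough significance s / sqrt(b); reasonable only when s << b."""
    return s / math.sqrt(b)


def asimov_significance(s, b):
    """Median discovery significance for a counting experiment.

    s: expected signal count, b: expected background count (assumed known
    exactly, so systematic uncertainties are ignored).
    """
    # log1p keeps precision when s / b is very small
    return math.sqrt(2.0 * ((s + b) * math.log1p(s / b) - s))


DISCOVERY_THRESHOLD = 5.0  # the conventional "five sigma" in particle physics

s, b = 100.0, 400.0  # hypothetical expected counts
for name, z in [("naive", naive_significance(s, b)), ("asimov", asimov_significance(s, b))]:
    print(f"{name}: Z = {z:.2f}, discovery: {z >= DISCOVERY_THRESHOLD}")
```

With these hypothetical counts the naive formula gives exactly 5.0 while the Asimov formula gives roughly 4.8, a reminder that the rough formula overstates the evidence when the signal is not small compared with the background.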

The Standard Model presents the mathematical narrative of the subatomic units known as fermions and bosons, of which the Higgs boson is one. That narrative can be reconstituted as diagrammed interactions (known as Feynman diagrams), which are turned into algorithmic steps for simulation packages built on the aforementioned Monte Carlo method. The vector bosons, or force mediators, consist of the photon, the gluons (a group of eight mediators of the strong interaction), and the weak bosons W± and Z0. The Higgs boson, whose discovery is now confirmed, is a scalar (spin-0) boson that provides a quantifiable explanation of why certain bosons have mass while others do not, through the Higgs mechanism, also known as the Anderson-Brout-Englert-Guralnik-Higgs-Hagen-Kibble-’t Hooft (ABEGHHK’tH) mechanism. The mechanism generates these masses through symmetry breaking, which contains the infinitely large divergences that interactions between massive bosons would otherwise produce.

Code is able to identify and tag signal peaks that tell physicists how and where to set the cut-off points of their searches, improving the choice of initial conditions. In knowing where to enact these cut-off points (a process known as the trigger), including identifying regions of microphysical interaction forbidden to the production of the Higgs boson, code signifies and identifies the normative while defining which selections are to be ignored, or even mulled over if the anomalies, the categorically queer, prove interesting. Now that the search for the Higgs boson has succeeded, nearly five decades after its initial prediction in 1964, physicists are ready to tackle more exotic micro-entities, not just at the high-energy scale but within the meso-scale region of atoms, ions, and molecules.
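The cut-off logic can be sketched in Python as a two-stage filter: a trigger-like energy threshold that discards events outright, followed by a mass window around a predicted peak. Events that pass the trigger but fall outside the window are set aside rather than thrown away, echoing the idea that anomalies may later be mulled over. The `Event` type, the threshold and the window are hypothetical; real trigger systems are multi-level and far more elaborate.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """Hypothetical, highly simplified collision event (arbitrary units)."""
    mass: float    # reconstructed invariant mass
    energy: float  # total deposited energy


def passes_trigger(event, energy_threshold):
    """Trigger-like decision: record the event only above an energy threshold."""
    return event.energy >= energy_threshold


def select(events, energy_threshold, mass_window):
    """Return (in_window, set_aside).

    Events failing the trigger are dropped outright, since data a trigger
    does not record are lost. Events that pass but fall outside the mass
    window are kept separately for later scrutiny.
    """
    lo, hi = mass_window
    in_window, set_aside = [], []
    for event in events:
        if not passes_trigger(event, energy_threshold):
            continue
        if lo <= event.mass <= hi:
            in_window.append(event)
        else:
            set_aside.append(event)
    return in_window, set_aside


events = [Event(125.0, 300.0), Event(125.0, 50.0), Event(140.0, 300.0)]
kept, aside = select(events, energy_threshold=100.0, mass_window=(120.0, 130.0))
print(len(kept), len(aside))
```

Keeping the set-aside events separate, rather than discarding them, is a design choice: it leaves room to examine the regions around a peak later.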

Units of confirmation are reconstructed from tracks produced by decaying subparticles, although not all entities decay quickly enough; some take so long that they might outlive most, if not all, civilizations, and therefore remain forever undetectable! The mathematical representations of physical interactions detected by remotely controlled instruments are then reconstructed with the aid of code and reconstituted into a complete confirmation of a discovery. Yet the entirety of that discovery is predicated on trust in the veracity of code, and in the ability of code to assemble available data to demonstrate established knowledge and knowledge gaps, as well as to generate hypothetical data points that further improve the trigger function. It is because of the legitimacy of code as the mediator of emergent and novel knowledge, and as a language that can describe a world observable only through sensitive detectors, that computational modelling and simulation have become vital to the calibration of physical realities. In the process of making the invisible visible, the ontology of code, as an embodiment of fundamental physical structures, is revealed through the decoded structures.
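Reconstructing a parent particle from its decay products rests on the invariant mass, m² = (ΣE)² − |Σp|², computed in natural units (c = 1). A minimal Python sketch follows; the four-momenta are hypothetical, chosen to resemble a pair of photons, since decay into two photons was one of the channels in which the Higgs boson was observed.

```python
import math


def invariant_mass(*four_momenta):
    """Invariant mass of a set of decay products.

    Each four-momentum is (E, px, py, pz) in natural units (c = 1):
    m^2 = (sum of E)^2 - |sum of p|^2
    """
    e = sum(p[0] for p in four_momenta)
    px = sum(p[1] for p in four_momenta)
    py = sum(p[2] for p in four_momenta)
    pz = sum(p[3] for p in four_momenta)
    m2 = e * e - (px * px + py * py + pz * pz)
    return math.sqrt(max(m2, 0.0))  # clamp tiny negative values from rounding


# Two hypothetical back-to-back photons of 62.5 energy units each
photon_1 = (62.5, 0.0, 0.0, 62.5)
photon_2 = (62.5, 0.0, 0.0, -62.5)
print(invariant_mass(photon_1, photon_2))
```

The two photons reconstruct a parent of mass 125.0 in the same units. Because the invariant mass does not depend on the frame of reference, the same calculation works whatever the parent’s momentum.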

It is the comfort derived from this increasing proximity to what lies under code (‘under the hood’) that assures physicists that scientific rigor can be maintained, and perhaps even increased, when they deploy code-enabled methodologies to explore increasingly speculative possibilities. One such possibility involves simulating what lies behind more than 95% of the universe, which has so far escaped most forms of detection, with the exception of faint murmurs and traces in the form of cosmic signals, dusty halos, and the presence of an ether-like substance in space whose gravity shapes the rotation of galaxies and warps light. As with the Higgs boson, experimental consortiums are digging further into the murmurs of gravitational waves, first detected by the LIGO experiment, to see whether they can trace a more concrete connection to dark matter. The study of dark matter and dark energy is made possible by the aforementioned remotely controlled detectors and scientific infrastructure. This includes code that can turn collected data into a feature film, such as the 2000 NOVA broadcast Runaway Universe and the more recent clip The Cosmos in the Computer, which features realistic, numerically driven simulations based on data captured by a global consortium of cosmologists, astronomers, and astrophysicists.

Figure 4 When looking at the galactic cloud generated from computerized observatories, one might ponder over how the hypothetical and the factual come together.

Code visualizes the non-material into being and turns raw data of unknown quality into a narrative of emergence. Does it matter how that code is operationalized, whether through transistors, electronic circuitry, nano-chips, or quantum bits? Perhaps the platform through which the code runs could change the outcome of the narrative, or how far the narrative can go, especially once one is no longer limited by available memory or storage. By turning speculative and exploratory science into logical and reasonable narratives, the explorer is free to peer into the layers hidden within those narratives and look for possibilities hiding in plain sight. Could code take on a life beyond the control of its inventor? This is what researchers in quantum computing and artificial intelligence are striving to discover.

NOTES

[1] Lequeux, James, Le Verrier: Magnificent and Detestable Astronomer (New York, 2013)

[2] Observations of Le Verrier’s Planet, In the Meridian, Monthly Notices of the Royal Astronomical Society (7.9), 13 November 1846, Pages 154–157, https://doi.org/10.1093/mnras/7.9.154

[3] The notes, in seven parts, were attached as an appendix to her translation of Menabrea’s short discussion of Babbage’s Difference and Analytical Engines: https://www.fourmilab.ch/babbage/sketch.html. The Difference Engine had already been prototyped, while the Analytical Engine remained an idea on paper.

[4] Whitehead, Alfred North, Process and Reality, corrected edition (New York, 1978)

—

Clarissa Ai Ling Lee is a research fellow at the Jeffrey Sachs Center on Sustainable Development at Sunway University in Malaysia by day and a science-art designer by vocation. While working on projects on big data and climate change, as well as the deployment of nuclear science and technology for sustainable development, she became interested in how data from the ground, including the conditions producing data gaps, could sustain or challenge developmental policies proposed by local governments and international developmental agencies. At the same time, she saw parallels and points of convergence between her PhD dissertation work on high-energy particle physics and the more macroscopic subject matter of her current research, showing how different epistemic categories and scales are not always as distinct as they seem. She is currently working on a series of projects on emergent thinking and making at the confluence of science-art. She blogs irregularly at http://modularcriticism.blogspot.com

Wai Sern Low is a research associate at the Jeffrey Sachs Center on Sustainable Development at Sunway University in Malaysia and until recently, an undergraduate engineering student. A self-described environmentalist, he is interested in pursuing practical solutions to the systemic climate crises of our time. He is currently working on decentralized energy systems and built-environment related projects. Will also code for food.

Author(s)

Clarissa Lee Ai Ling

Special Studies Division, Jeffrey Cheah Institute on Southeast Asia.

Former Research Fellow (2017-2020)